Consider a recent MIT Review article about the latest 3D printing lab experiments. What is their importance to inventors, and what can we use to predict the evolution of this technology?

When we study history, especially the history of innovation, we conventionally mention the Stone Age, the Bronze Age, the Iron Age, etc. At the core of such descriptions lies a wonder material (stone, bronze, iron, steel, silicon): something that enables a huge range of applications, which power technology developments for decades or even hundreds and thousands of years.

Paradoxically, there's no Clay Age (see fig. below).

This is really unfortunate because clay turned out to be the ultimate material: it has served us humans for thousands of years and enabled us to produce an amazing range of objects and technologies, from bricks to construction and architecture, from jars to storage and shipping, from ceramics to chemistry and modern waterworks, from concrete to skyscrapers and highway transportation systems. From an inventor's perspective, I see clay-based technologies as the first example of what we today call additive manufacturing.

Let's go back a few thousand years and compare stone (Before) and clay (After) as manufacturing materials. If you live in a cave and use stone to make your tools, you have to chip away, blow by blow, the parts of the original piece of rock that don't fit your design.

Even if we consider "raw" rocks cheap and disregard the waste of material itself, our ability to shape rock or change its internal physical structure is severely limited by what we can find in nature. By contrast, clay is extremely malleable: you can shape it, add filaments, make it hollow, make it solid, make it hard, glaze it, and much more. If you are a hunter-gatherer, by combining clay and fire you can create all kinds of sharp weapons that your Stone Age competition can't even imagine. If you are a gatherer, you can create jars and jugs using one of the cornerstone inventions of human civilization: the Potter's Wheel.

If you are a house builder, even a primitive one, you can use mud bricks and reinforce them with straw. As you master fire and masonry, you learn how to create bricks and construct buildings that last decades and centuries instead of years. You can even print money tokens with appropriate clay technologies! Furthermore, with advanced firing techniques, you learn how to melt and shape metals and discover important alloys, such as bronze. Ultimately, you develop communities of innovation and economies of scale unheard of in the Stone Age.

Why is thinking about the Clay Age important today, when we are well beyond using mud to build cities? The main goal is to gain insight into what additive manufacturing can do for us in the years to come. Just like clay, 3D printing represents a technology approach with promising long-term potential. That is, when working with both clay and 3D printing, instead of removing and wasting excess material, we add material and shape surfaces to achieve the desired design. Luckily, for 3D printing we can leverage what we have learned from clay.

Over thousands of years, humans learned to work with clay by combining six key modifying methods:

1. Shape - change the outer geometry (e.g. brick).

2. Thin or thicken - change the inner geometry (e.g. thin jar).

3. Fill - change the inner structure (e.g. reinforced concrete).

4. Fire - modify inner and/or outer hardness or other material properties (e.g. hardened stove brick).

5. Slip - modify or create an outer layer with specific properties (e.g. ceramic glazes).

6. Decorate - add paint or other exterior designs to make things aesthetically appealing.

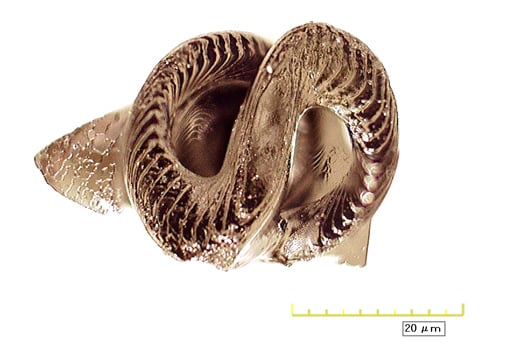

With 3D printing we are still working on items 1 and 2, barely touching 3. Some research labs are approaching item 4 on our list: firing, or its equivalents. For example, the MIT article that I mentioned at the beginning of the post describes the ancient sequence of a clay-based technology: shape your piece from a soft material with special additives, then fire it in a kiln to achieve the desired hardness and durability. Remarkably, modern 3D printing combines an ancient material (ceramics) with modern design techniques (computer modeling and manufacturing).

In the short term, 3D printing has gone through a lot of hype that has fizzled a bit by now. In the long term, the age of 3D printing, just like the Clay Age, is going to create a strong foundation for a broad range of human technologies. Basically, we are in the hunter-gatherer stage of our 3D evolution curve.

tags: technology, innovation, history, invention, creativity